Unsung Siebel Command Line Heroes: dataexp

When it comes to getting data into and out of a Siebel database, the first tools that usually come to mind are Siebel EAI business services such as EAI Siebel Adapter, or Enterprise Integration Manager (EIM). While EIM and EAI provide the richest functionality and are popular for good reason, sometimes we just need to transport some records in a table from one Siebel database to another.

Such scenarios are common in non-production environments, such as test instances where we want to set up test records quickly.

In this small series, I would like to discuss two command line utilities that have been around for a long time (probably since the very early Siebel versions), namely dataexp and dataimp. In this article, we introduce dataexp, and in the next we take care of dataimp.

The seasoned Siebel veteran might have seen dataimp being used heavily during Siebel seed data import, while installing or upgrading a Siebel database.

Siebel Bookshelf mentions these utilities only rarely, mainly for 'backup' scenarios (such as for LOVs). It is important to note that dataexp and dataimp are low-level flat-file tools that are used internally in Siebel CRM, and the general recommendation is NOT to use them for purposes other than simple flat-file data backups. So, for example, if you want to move your account or contact data from production to test, you had better use EIM or EAI.

Some years ago, this humble writer was tasked with migrating data from the Siebel sample database to a server environment (aka 'Sybase to Oracle') and I found solace in using dataexp to extract the data from the sample DB and dataimp to import it into the server database.

Exporting data from a Siebel database using dataexp

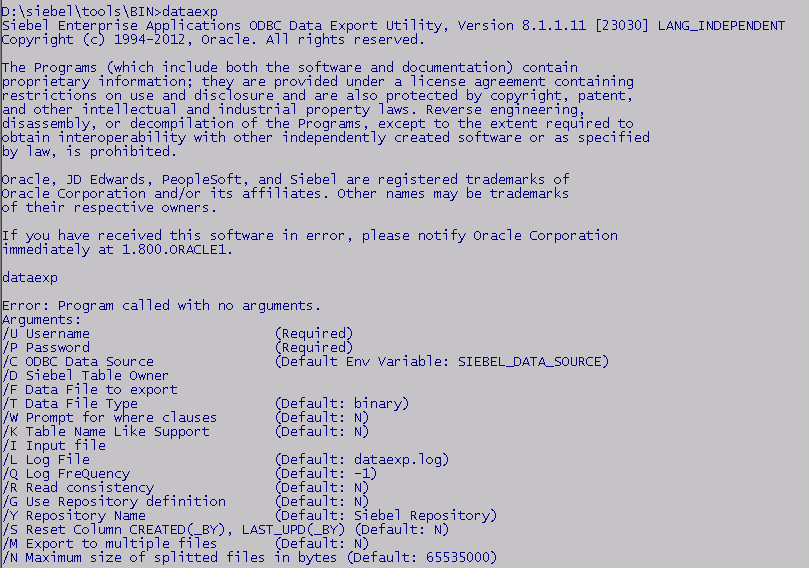

You can find the dataexp (and dataimp) command line utilities in the BIN folders of Siebel Tools or the Siebel Server. First let's fire up dataexp without any arguments to get a list of mandatory and optional input arguments.

Obviously /U (Username) and /P (Password) are required as per the argument list, and I think it wouldn't work without specifying /C (ODBC Data Source) and /D (Siebel Table Owner).

Furthermore, you'll need /F to define the path of the export file (if you don't accept the default).

So a first test command looks like the following.

dataexp /U SADMIN /P SADMIN /C ouisandbox /D SIEBEL

In the above example, the ODBC data source name is 'ouisandbox', which should be replaced by an existing ODBC data source for the database you want to export from.

If you don't specify /F, the dataexp utility will prompt you to enter the path to the export file (default is dataexp.dat). It will then prompt you for the name of a table to export. After you enter a table name, the data is exported to the .dat file and the utility prompts for the next table. When you stop providing table names, the utility exits.

In the example in the screenshot we exported the S_OPTY and S_OPTY_X tables to a file named test.dat.
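For scripted use, the test command shown earlier could be assembled by a small Python helper. This is only a sketch: the function name and its parameters are hypothetical, and it uses nothing beyond the switches already discussed (/U, /P, /C, /D and /F). It builds the argument list and prints it, without running dataexp.

```python
from typing import List, Optional


def build_dataexp_args(
    user: str,
    password: str,
    odbc_dsn: str,
    table_owner: str,
    export_file: Optional[str] = None,
) -> List[str]:
    """Return a dataexp command line as a list of arguments (hypothetical helper)."""
    args = ["dataexp", "/U", user, "/P", password, "/C", odbc_dsn, "/D", table_owner]
    if export_file is not None:
        # Only add /F when a path is given; without it dataexp asks for the path.
        args += ["/F", export_file]
    return args


# Same values as the first test command in this article.
print(" ".join(build_dataexp_args("SADMIN", "SADMIN", "ouisandbox", "SIEBEL")))
```

Keeping the arguments in a list rather than one long string avoids quoting problems if the list is later handed to something like subprocess.run. Note that the utility would still prompt for table names when started this way.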

If you dare to open the .dat file, you will see a veritable mess of readable and binary data. The utility exports all columns of the table by default in binary format (see below). Not for the faint of heart, and certainly not anything you would want to edit.

So let's explore some of dataexp's specialized options or arguments:

File Output Format

The /T argument allows us to specify the output format (see above for the default (b)inary output). Other options are (c)ompressed or (t)ext.

So this command line will result in a text output file which is much more readable (and editable, at your own risk of course).

dataexp /U SADMIN /P SADMIN /C ouisandbox /D SIEBEL /F d:\test.dat /T text

The (c)ompressed option will render a file that is in fact compressed and much smaller in size.

Where Clause

The /W argument - when set to Y - will instruct the dataexp utility to prompt for a where clause which you can use to apply a filter to your source data, so you don't export all rows.

At the prompt, provide a WHERE clause including the WHERE keyword, as in the following example.

WHERE ROW_ID LIKE '1%'

We Like Table Names

The /K argument - when set to Y - allows you to use the % wildcard while specifying the table name. So for example you can enter S_OPTY%X to define all extension tables for S_OPTY as the source.
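To get a feeling for which names a pattern like S_OPTY%X would pick up, the matching can be imitated in a few lines of Python. This is a sketch under an assumption: only % is treated as a wildcard and every other character, including the underscore, is matched literally. Whether dataexp behaves exactly like this is not confirmed here. The table list is illustrative and not read from a real repository.

```python
import re


def like_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a pattern where % matches any run of characters into a regex.

    Assumption: % is the only wildcard; all other characters are literal.
    """
    parts = [re.escape(piece) for piece in pattern.split("%")]
    return re.compile("^" + ".*".join(parts) + "$")


# Illustrative table names only.
tables = ["S_OPTY", "S_OPTY_X", "S_OPTY_XM", "S_OPTY_POSTN"]
matcher = like_to_regex("S_OPTY%X")
matched = [name for name in tables if matcher.match(name)]
print(matched)
```

Under this assumption, a name such as S_OPTY_XM would not be matched, because the pattern requires the name to end in X.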

Using an Input File

If you want to automate the export process, the /I argument comes in handy. A valid input file for dataexp is a text file which contains a list of table names (one table per line). For each table you can specify a WHERE clause. Inspecting the seed_locale.inp file, which you find in your Siebel folders, yields a good example of what an input file can look like.
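A list of table names in the one-table-per-line form described above could be generated with a short Python helper. This is a sketch only: the function names and the file name tables.inp are hypothetical, and it deliberately writes table names without WHERE clauses, because the exact syntax for those is best taken from seed_locale.inp rather than guessed.

```python
from pathlib import Path
from typing import Iterable, List


def render_table_list(table_names: Iterable[str]) -> str:
    """Return the input file content: one table name per line.

    Blank entries and duplicates are dropped; the original order is kept.
    """
    seen: List[str] = []
    for name in table_names:
        cleaned = name.strip()
        if cleaned and cleaned not in seen:
            seen.append(cleaned)
    return "".join(line + "\n" for line in seen)


def write_table_list(path: str, table_names: Iterable[str]) -> None:
    """Write the rendered list to a file (hypothetical name, e.g. tables.inp)."""
    Path(path).write_text(render_table_list(table_names), encoding="ascii")


print(render_table_list(["S_OPTY", " S_OPTY_X ", "", "S_OPTY"]), end="")
```

The resulting file would then be passed to dataexp with the /I argument.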

Logging

The default log file is dataexp.log, but you can change the path using the /L parameter. The /Q parameter allows you to set the logging interval. The default is -1, which probably means that the log is only written at the end of the process. With a value of 1, the utility appears to write to the log file every second, which slows down the process considerably.

Reset System Columns

When we set the /S argument to Y, then the following columns will have default values in the export file (not in the source database of course).

CREATED="1980-01-01 00:00:00"

CREATED_BY="0-1"

LAST_UPD="1980-01-01 00:00:00"

LAST_UPD_BY="0-1"

As you probably know, '0-1' is SADMIN's ROW_ID.
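The effect of /S on a single record can be modelled in Python as follows. This is purely an illustration of the substitution described above, not how dataexp is implemented. The sample row and the function name are made up for the example.

```python
from typing import Dict

# Default values listed in this article for an export with /S set to Y.
RESET_VALUES: Dict[str, str] = {
    "CREATED": "1980-01-01 00:00:00",
    "CREATED_BY": "0-1",
    "LAST_UPD": "1980-01-01 00:00:00",
    "LAST_UPD_BY": "0-1",
}


def reset_system_columns(row: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the row with the four system columns set to defaults."""
    result = dict(row)
    for column, value in RESET_VALUES.items():
        if column in result:
            result[column] = value
    return result


# Made-up sample record, for illustration only.
sample = {
    "ROW_ID": "1-ABC",
    "NAME": "Test Opportunity",
    "CREATED": "2015-06-01 10:15:00",
    "CREATED_BY": "1-XYZ",
    "LAST_UPD": "2015-06-02 08:00:00",
    "LAST_UPD_BY": "1-XYZ",
}
print(reset_system_columns(sample))
```

All other columns, such as ROW_ID, pass through unchanged in this model, and the input record itself is not modified.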

Multiple Files

Using the /M parameter (set to Y), we can split the output into multiple files. It goes together with the /N argument, which defines the maximum file size in bytes. If you specify /N, the smallest value accepted is 2000000.

As a result, you will find multiple output files if the export exceeds the maximum file size. They carry a suffix with the file number, such as export.dat.001 and so forth.
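For a rough idea of how many files to expect, the arithmetic can be sketched in Python. The assumption here is that the output is cut exactly at the /N size; the utility may well split at record boundaries, so treat the result as an estimate. The function name is hypothetical.

```python
import math

# Smallest value for /N according to this article.
MIN_SPLIT_BYTES = 2_000_000


def estimate_file_count(total_bytes: int, max_file_bytes: int) -> int:
    """Estimate the number of output files for a given export size.

    Assumes a clean split every max_file_bytes bytes.
    """
    if max_file_bytes < MIN_SPLIT_BYTES:
        raise ValueError("/N must be at least %d bytes" % MIN_SPLIT_BYTES)
    if total_bytes < 0:
        raise ValueError("total_bytes must not be negative")
    # Even an empty export produces one file in this model.
    return max(1, math.ceil(total_bytes / max_file_bytes))


print(estimate_file_count(5_000_000, 2_000_000))
```

In this model, a 5,000,000 byte export with /N set to 2000000 would end up in three files.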

Undocumented Arguments

For the following arguments there is not much information available, so let's consider them for internal use.

/R Read consistency (Default: N): Probably dealing with dirty reads.

/G Use Repository definition (Default: N): Probably used with /Y to read repository metadata

/Y Repository Name (Default: Siebel Repository)

Summary

The dataexp command line utility can be put to good use for simple flat-data backups from single tables. Again, please heed the warning that this is NOT fully documented and should NOT replace your usual toys for integrating Siebel with other applications. Rather, see it as a Siebel-to-Siebel tool which is mostly used internally.

have a nice day

@lex
